At Thamestechai, we strive to help you protect your AI systems by creating solutions that are intelligent, secure, and resilient. One of the most critical challenges in AI security today is the threat of Input Manipulation Attacks. These attacks exploit vulnerabilities in machine learning (ML) models by subtly altering input data, often with significant consequences. This post explains how these attacks work, why they matter, and how to defend against them.

What Are Input Manipulation Attacks?

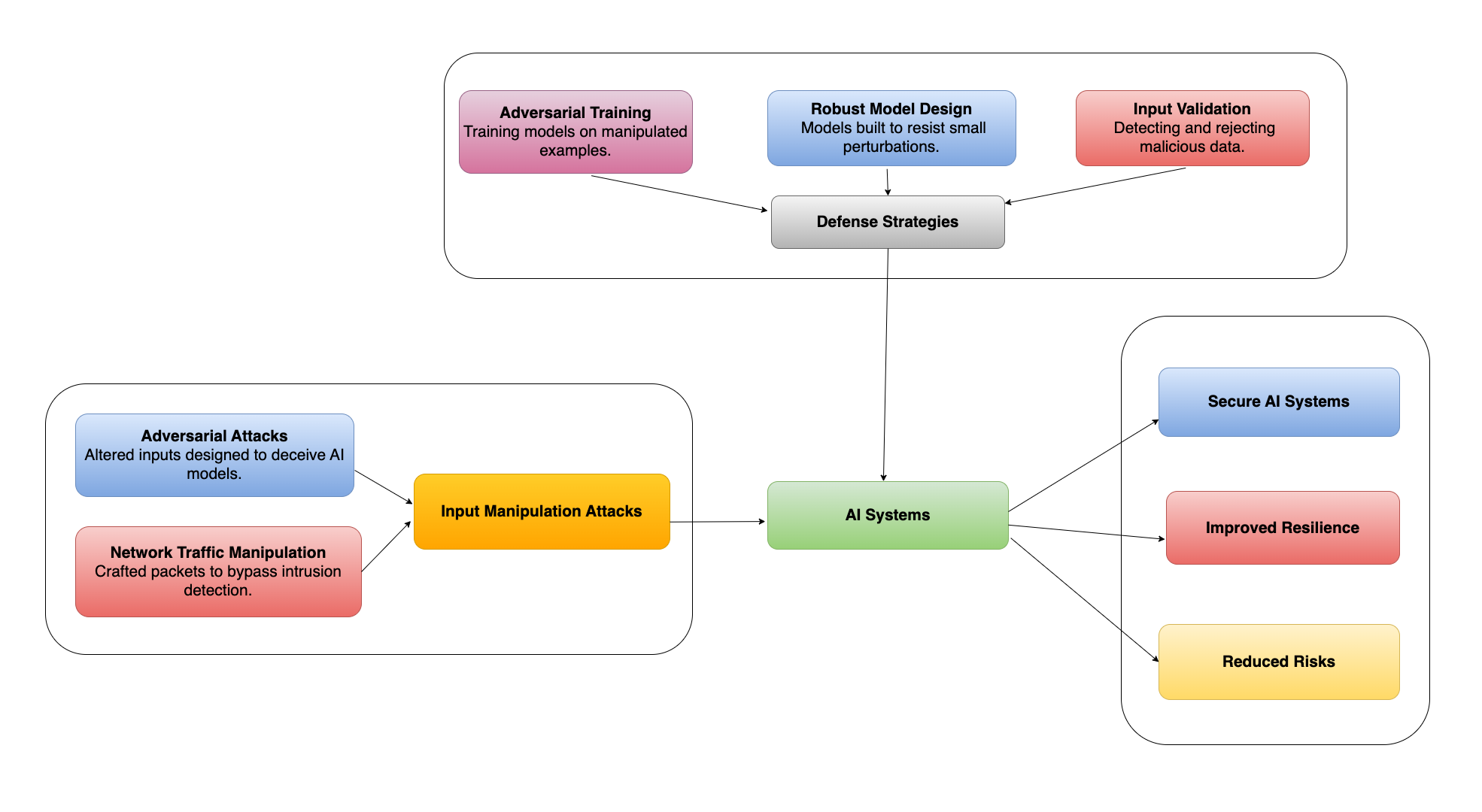

Input Manipulation Attacks occur when attackers deliberately alter input data to deceive machine learning models, so protecting your AI systems starts with understanding how these alterations work. The best-known variant is the adversarial attack, in which small, carefully crafted changes to the input, often imperceptible to humans, cause the model to make incorrect predictions or classifications.

These attacks highlight a critical weakness in current AI systems: their susceptibility to maliciously designed data.
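Formally, using the standard formulation from the research literature (not specific to any particular product), an adversarial example for a classifier f, an input x with true label y, and a small perturbation budget ε is an input x + δ that satisfies:

```latex
f(x + \delta) \neq y \quad \text{subject to} \quad \|\delta\|_\infty \le \epsilon
```

The ℓ∞ bound caps how much any single feature, such as one pixel value, may change, which is what keeps the manipulation subtle. Other norms, such as ℓ2, are also common.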

Key Characteristics of Input Manipulation Attacks:

- Subtlety: Changes are often too small for humans to detect.
- Targeted Impact: Designed to exploit specific vulnerabilities in a model.
- Broad Applicability: Can target various domains, from image recognition to cybersecurity systems.

For a deeper understanding, refer to the influential work by Goodfellow et al. (2014), which explained why machine learning models are vulnerable to adversarial examples and introduced the fast gradient sign method (FGSM) for generating them.

Real-World Examples

1. Image Classification Manipulation

In an image classification system, a model trained to differentiate between cats and dogs can be fooled by an adversarial image. For example, an image of a cat can be subtly altered so that the model misclassifies it as a dog. While the image looks like a cat to a human observer, the manipulated data deceives the model, potentially undermining trust in AI-driven decisions.
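A minimal sketch of how such an image can be crafted, using the fast gradient sign method (FGSM) described by Goodfellow et al. (2014). To stay self-contained, it assumes a hypothetical logistic-regression classifier over flattened pixel values instead of a deep network, and the weights and "cat" image are random placeholders. In a deep model, the input gradient would come from backpropagation rather than the closed-form expression used here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(w, b, x):
    """Hypothetical labels: 1 means "dog", 0 means "cat"."""
    return int(sigmoid(x @ w + b) > 0.5)

def fgsm(w, b, x, y, eps):
    """Fast gradient sign method against a logistic-regression model.

    For binary cross-entropy, the gradient of the loss with respect to the
    input is (sigmoid(w.x + b) - y) * w. Stepping eps in the sign of that
    gradient increases the loss as much as possible while changing each
    pixel by at most eps.
    """
    grad_x = (sigmoid(x @ w + b) - y) * w
    x_adv = x + eps * np.sign(grad_x)
    return np.clip(x_adv, 0.0, 1.0)  # keep pixel values in the valid [0, 1] range

rng = np.random.default_rng(0)
d = 28 * 28                        # a hypothetical 28x28 grayscale image, flattened
w = rng.normal(size=d)             # placeholder weights for an already-trained model
x = rng.uniform(0.2, 0.8, size=d)  # placeholder "cat" image
b = -0.5 - x @ w                   # bias chosen so the clean image scores as "cat"

x_adv = fgsm(w, b, x, y=0, eps=0.02)
print(predict(w, b, x), predict(w, b, x_adv))  # → 0 1
print(np.abs(x_adv - x).max())                 # no pixel moves by more than 0.02
```

Each pixel moves by only 0.02, but across 784 pixels those small changes add up and shift the model's score far enough to flip the label.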

A well-known physical-world example involves road signs, which autonomous vehicles must read correctly. Eykholt et al. (2018) showed that small stickers placed on a stop sign, designed to look like ordinary graffiti, could cause a road-sign classifier to misread it (for example, as a speed limit sign), the kind of error that could lead to dangerous outcomes on the road.

2. Network Traffic Evasion

Machine learning is widely used in intrusion detection systems (IDS). An attacker can craft malicious network traffic that bypasses an ML-based IDS by altering the features the model relies on, such as the source IP address or payload data, while keeping the attack functional. For instance, encrypting the payload or routing traffic through a proxy server changes these features and can help attackers evade detection. This poses significant risks in environments where data integrity is critical.
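The sketch below illustrates feature-space evasion. It assumes a hypothetical linear IDS with made-up weights over five standardized flow features, and assumes the attacker can only pad packets and add delays (increasing two features) without breaking the attack. Real detectors are usually nonlinear and real constraints are messier, so treat this as an illustration only.

```python
import numpy as np

# Hypothetical standardized flow features, in this order:
# packet_count, mean_packet_size, mean_interarrival_ms, payload_entropy, uncommon_dst_port
w = np.array([0.8, -0.6, -0.9, 1.2, 1.5])  # made-up weights of a linear IDS
b = -1.0

def is_flagged(x):
    """The hypothetical IDS raises an alert when the linear score is positive."""
    return float(x @ w + b) > 0.0

def evade(x, mutable, lower, upper):
    """Push each attacker-controlled feature to whichever allowed bound lowers
    the score. For a linear model with per-feature bounds, this reaches the
    lowest possible score. Features outside `mutable` stay fixed, since
    changing them would break the attack itself."""
    x_adv = x.copy()
    for i in mutable:
        x_adv[i] = lower[i] if w[i] > 0 else upper[i]
    return x_adv

x = np.array([1.0, 0.0, 0.0, 1.5, 1.0])  # a hypothetical malicious flow
lower, upper = x.copy(), x + 3.0          # padding and delays can only increase features
x_adv = evade(x, mutable=[1, 2], lower=lower, upper=upper)
print(is_flagged(x), is_flagged(x_adv))   # → True False
```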

Why These Attacks Matter

Input manipulation attacks are more than theoretical risks; they have real-world implications:

- Ease of Exploitation: Attackers with knowledge of deep learning and image processing can craft manipulative inputs with minimal effort, particularly when they have access to the model or to a similar substitute model.
- Detection Challenges: Manipulated inputs often look legitimate, making it difficult for traditional monitoring systems to flag them.
- High Impact: Misclassification or evasion can lead to system failures, data breaches, or even physical harm in applications like healthcare or autonomous systems.

For more insights, consider reviewing “Adversarial Attacks and Defenses in Artificial Intelligence” (Yuan et al., 2019).

Defense Strategies

Securing AI systems against input manipulation attacks requires a proactive, multi-layered approach. Below are some widely studied defenses:

1. Adversarial Training

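This method involves exposing the model to adversarial examples during training. By training on perturbed inputs paired with their correct labels, the model learns to classify such inputs correctly instead of being misled by them. A notable formulation appears in “Towards Deep Learning Models Resistant to Adversarial Attacks” (Madry et al., 2018), which frames adversarial training as a min-max problem: an inner step finds the worst-case perturbation within a small budget, and an outer step updates the model to minimize the loss on those perturbed inputs.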
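A minimal sketch of adversarial training, assuming a hypothetical logistic-regression model on synthetic 2D data so that all gradients have closed forms. Madry et al. approximate the inner maximization with multi-step projected gradient descent (PGD); this sketch uses a single FGSM step instead, which for a linear model under an ℓ∞ budget already finds the exact worst-case perturbation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_batch(w, b, X, y, eps):
    """Inner maximization: perturb each row of X to increase its loss.

    For logistic regression the input gradient of the cross-entropy loss is
    (p - y) * w, so one signed step of size eps is the worst case under an
    L-infinity budget of eps. At w = 0 the step is zero (clean data).
    """
    p = sigmoid(X @ w + b)
    grad_X = (p - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_X)

def adversarial_train(X, y, eps, lr=0.1, epochs=200):
    """Outer minimization: gradient descent on the loss of perturbed inputs."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        X_adv = fgsm_batch(w, b, X, y, eps)   # regenerate attacks against the current model
        p = sigmoid(X_adv @ w + b)
        w -= lr * (X_adv.T @ (p - y)) / n     # cross-entropy gradient w.r.t. w
        b -= lr * float(np.mean(p - y))       # cross-entropy gradient w.r.t. b
    return w, b

# Synthetic, hypothetical data: class 1 clustered near (3, 3), class 0 near (-3, -3).
rng = np.random.default_rng(0)
n = 200
y = (np.arange(n) % 2).astype(float)
centers = np.where(y[:, None] == 1, 3.0, -3.0)
X = centers + rng.normal(scale=0.5, size=(n, 2))

eps = 0.5
w, b = adversarial_train(X, y, eps=eps)
X_attack = fgsm_batch(w, b, X, y, eps)  # attack the trained model with the same budget
robust_acc = np.mean((sigmoid(X_attack @ w + b) > 0.5) == (y == 1))
print(f"accuracy under FGSM (eps={eps}): {robust_acc:.2f}")
```

Each epoch regenerates the attacks against the current weights, which is what makes this a min-max procedure rather than one-off data augmentation. It is also why adversarial training costs more than standard training.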

2. Robust Model Design

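Designing models to be inherently resilient to small perturbations is critical. Early proposals such as defensive distillation and gradient masking were later circumvented by stronger attacks (Carlini & Wagner, 2017; Athalye et al., 2018), so they should be treated with caution. Certified defenses offer stronger assurances: they come with a guarantee, sometimes holding with high probability, that the model's prediction will not change under any perturbation within a stated size.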
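As one example of a certified defense, the sketch below follows the idea of randomized smoothing (Cohen et al., 2019): classify many Gaussian-noised copies of the input, return the majority vote, and derive an ℓ2 radius σ·Φ⁻¹(p) within which that vote should not change, where p is the probability of the top class under noise. It is simplified: it plugs in the raw vote fraction where the original method uses a statistical lower bound, so the radius it reports is an estimate rather than a true certificate. The base classifier is a hypothetical placeholder.

```python
import numpy as np
from statistics import NormalDist

def smoothed_predict(classify, x, sigma, n_samples=1000, seed=0):
    """Simplified randomized smoothing.

    Returns (majority_class, estimated_l2_radius). A radius of 0.0 means no
    robustness claim can be made.
    """
    rng = np.random.default_rng(seed)
    noisy = x + rng.normal(scale=sigma, size=(n_samples,) + x.shape)
    votes = np.bincount([classify(z) for z in noisy])
    top = int(np.argmax(votes))
    p_top = votes[top] / n_samples
    if p_top <= 0.5:
        return top, 0.0
    # Cohen et al. use a lower confidence bound on p_top here; the raw
    # estimate is used for brevity, so this radius is not a true certificate.
    p_top = min(p_top, 1.0 - 1e-6)  # inv_cdf(1.0) is undefined
    return top, sigma * NormalDist().inv_cdf(p_top)

def base_classifier(z):
    """Hypothetical base model: class 1 if the features sum to more than 0."""
    return int(z.sum() > 0)

label, radius = smoothed_predict(base_classifier, np.array([1.0, 1.0]), sigma=1.0)
print(label, round(radius, 2))
```

For a linear base classifier like this one, the smoothed radius approximates the true distance from the input to the decision boundary (here √2 ≈ 1.41), which makes a useful sanity check.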

3. Input Validation

Implementing input validation mechanisms can help detect and reject malicious inputs before they reach the model. Techniques such as anomaly detection, statistical analysis, and monitoring for unexpected patterns can reduce the risk of attacks, although carefully crafted adversarial inputs may be statistically indistinguishable from normal data. Combining input validation with domain-specific knowledge, such as the physically valid range of a sensor reading, makes it more effective.
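A minimal sketch of layered input validation, assuming a hypothetical three-channel temperature sensor with a made-up valid range of -40 to 85 °C: a domain rule rejects physically impossible readings, and a per-feature z-score check against training data flags statistical outliers. The class name, thresholds, and data are illustrative assumptions.

```python
import numpy as np

class InputValidator:
    """Hypothetical pre-model filter: a domain range rule plus a z-score check."""

    def __init__(self, train_X, valid_min, valid_max, z_threshold=4.0):
        self.mean = train_X.mean(axis=0)
        self.std = train_X.std(axis=0) + 1e-8  # guard against zero variance
        self.valid_min = valid_min
        self.valid_max = valid_max
        self.z_threshold = z_threshold

    def check(self, x):
        """Return (accepted, reason) for a single input vector."""
        if np.any(x < self.valid_min) or np.any(x > self.valid_max):
            return False, "outside physical range"
        z = np.abs(x - self.mean) / self.std
        if z.max() > self.z_threshold:
            return False, "statistical outlier"
        return True, "ok"

rng = np.random.default_rng(0)
train_X = rng.normal(loc=20.0, scale=2.0, size=(1000, 3))  # placeholder readings in °C
validator = InputValidator(train_X, valid_min=-40.0, valid_max=85.0)

print(validator.check(np.array([20.0, 21.0, 19.5])))   # → (True, 'ok')
print(validator.check(np.array([20.0, 200.0, 20.0])))  # → (False, 'outside physical range')
print(validator.check(np.array([20.0, 20.0, 45.0])))   # → (False, 'statistical outlier')
print(validator.check(np.array([20.1, 21.1, 19.6])))   # → (True, 'ok')
```

The last call illustrates a limitation: a small, deliberate shift that stays within normal statistics passes both checks, which is why validation complements, rather than replaces, adversarial training and robust model design.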

Challenges in Defense

While the strategies above are promising, implementing them is not without difficulties:

- Computational Cost: Adversarial training generates fresh attacks at every training step, which can multiply training time several-fold, and robust model architectures add further resource requirements.
- False Positives: Overzealous input validation may mistakenly block legitimate inputs, affecting usability.
- Evolving Attack Vectors: Attack techniques evolve rapidly, necessitating continuous research and updates to defense mechanisms.

For a broader view on these challenges, refer to “The Limitations of Adversarial Training” (Tramèr et al., 2020).

Thamestechai’s Approach to AI Security

At Thamestechai, we are dedicated to advancing AI systems that are as secure as they are intelligent. Our approach includes:

- Advanced Adversarial Training: Incorporating cutting-edge techniques to improve model resilience.
- Robust Architectures: Leveraging state-of-the-art methods to design models resistant to manipulation.
- Rigorous Validation: Implementing multi-layered validation processes to detect and mitigate threats effectively.

We collaborate with industry leaders and academic researchers to stay ahead of evolving threats and ensure that our solutions meet the highest security standards.

For more on our commitment to AI security, explore our AI Security Solutions page.

Conclusion

Input Manipulation Attacks are a significant challenge in the world of AI, but with the right strategies and continuous innovation, we can build systems that are secure, trustworthy, and reliable. At Thamestechai, we are committed to leading the charge in AI security, ensuring our technologies remain robust against emerging threats.

For more information or to partner with us in creating secure AI systems, reach out today. Together, we can build a future where AI serves humanity safely and effectively.

#AI #Cybersecurity #MachineLearning #AdversarialAttacks #AIInnovation